Category: Theory

-

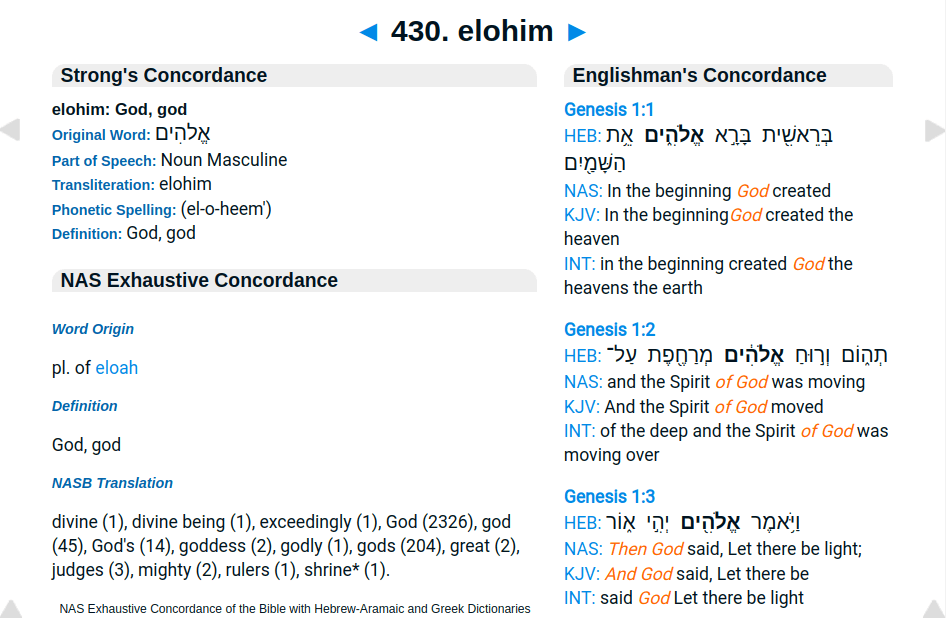

Several Bible Study Tools and Tricks

There are a lot of great free Bible study resources online. Since I am about to start a new two-year (but in-depth) Bible study on April 2, I thought I would give you […]

-

If Truth Is Complex, Why Is Fact-Checking So Simplistic?

For the last several years, powerful media and government organizations have been sounding the alarm with increasing urgency about what they are pleased to call “disinformation.” Defined in various ways, the main thing […]

-

Is There an Exit from Search Hell?

I would not use Google; it’s both censorware and spyware. And I would not trust Bing, or Yahoo, which is powered by Bing, for the same reason. Such reasoning is why many of us switched to DuckDuckGo in the last few years, despite the fact that Bing is one of their sources. In addition to […]

-

Why Neutrality

I drafted this article for Ballotpedia.org, where it first appeared in December 2015. I have since published a slightly updated version (not the one below) in Essays on Free Knowledge. As a teenager, I habitually scanned […]

-

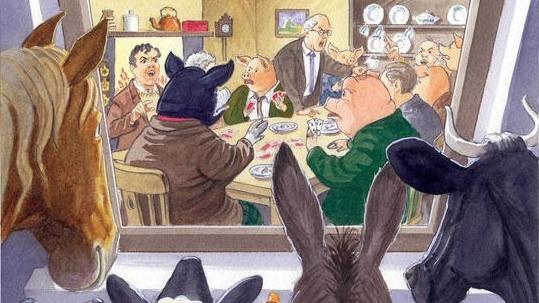

Wikipedia Is More One-Sided Than Ever

“All encyclopedic content on Wikipedia,” declares a policy page, “must be written from a neutral point of view (NPOV).” This is essential policy, believe it or not. Maybe that will be hard to […]

-

A first attempt at using WordPress for microblogging

Here is the brand spanking new Larry Sanger Microblog, which lives at a domain I had sitting around doing nothing: http://StartThis.org. As you’ll see, it looks a little like a social media feed. […]