tl;dr:

- Defending Knowledge Freedom – Rising censorship and content alterations threaten access to classic works, encyclopedias, and foundational texts.

- Offline Digital Libraries – ZWIBook flash drives already distribute 69,020 books, preserving knowledge against censorship and disasters.

- Distributed Data Networks – I propose decentralized systems to store and share encyclopedias, books, and archives for permanent public access.

- Practical Preservation Tools – Creating affordable drives and servers for redundant data backups, safeguarding cultural and intellectual heritage.

- Call for Action and Support – Donors and supporters can help build this infrastructure to protect knowledge and secure freedom of information.

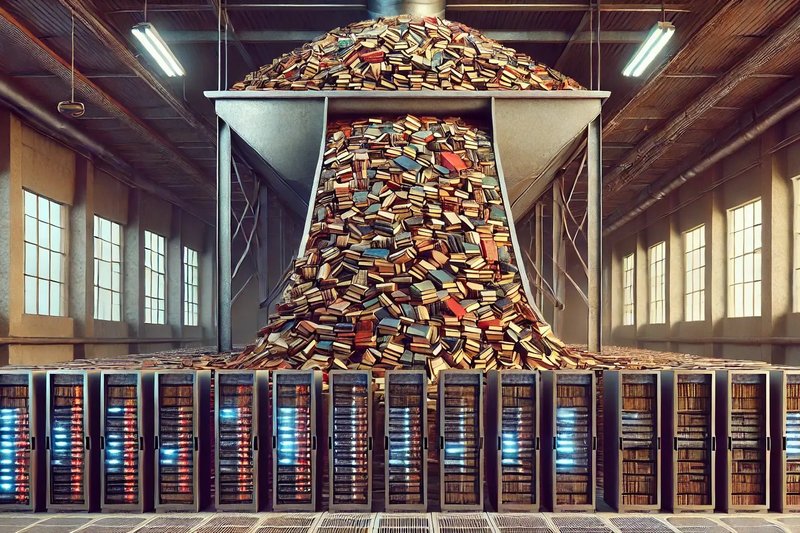

IMAGINE that each free encyclopedia and public domain book could be found in thousands of copies, all around the world, making it permanently impossible to censor them. What if we were to create a system in which redundant digital libraries each had a complete copy of this massive knowledge trove, all operating on common standards and protocols? Could you, as a good digital citizen, support the same effort by devoting a portion of your hard drive to making the seeds of knowledge even more broadly distributed?

Think this is unnecessary? Think again. Modern civilization around the globe has been moving in a dangerous direction, restricting the means of knowledge.

The Attack on Freedom of Knowledge

Just ten years ago, freedom of speech was defended zealously by both left and right in the United States. Now it is widely thought to be a conservative issue. In a few short years, the United Nations and various NGOs have ramped up the calls for attacks on “misinformation.” The U.S. government has shown alarming willingness to do their bidding; think of the Twitter Files and how Wikipedia’s Katherine Maher admitted to coordinating with government agents to censor “misinformation.” Australian legislators proposed a bill granting the Communications and Media Authority the power to fine tech companies for posting what they deem to be harmful false content. And the content itself can be changed. Wikipedia is only the most famous example. But the rot has spread even to books. Roald Dahl’s classics Charlie and the Chocolate Factory and The Witches are being bowdlerized, as are Ian Fleming’s James Bond books, Hugh Lofting’s Doctor Dolittle series, and even Mark Twain’s Adventures of Huckleberry Finn.

As disturbing as these developments are—and these are just a few of hundreds of similar stories—it could get much worse. Bear in mind that the Establishment Left fawns over the Chinese government. Yet look at what that could mean. The Chinese Communist Party has banned online sales of the Bible, as religious persecution “intensifies.” Consider how unwoke and unreconstructed the Bible is, and how hated traditional Christianity is by many elements of the woke left; is it any surprise if radical activists are now targeting the Bible itself? As left-leaning as Wikipedia is, it has been restricted by many governments, including China. And we must not overlook the extent of outright book censorship under repressive regimes historically: the Soviets, the Nazis, and the Chinese under the Cultural Revolution restricted many books regarded as important to basic education by free Westerners. Good luck finding many of the familiar classics, or even many inconvenient facts, if a totalitarian regime gains control.

We must preserve our knowledge.

To this end, the latest project of the Knowledge Standards Foundation is the creation of ZWIBook (128GB) flash drives, which include 69,020 books with bespoke reader software. Priced at $50 or $100, they have been selling reasonably well: over 300 units in the last three months, with very little publicity. These portable libraries allow ordinary people to participate in the preservation of the foundational texts of Western civilization. The more copies of these books (sourced from Project Gutenberg) there are in circulation, the more we have “future proofed” Western civilization itself. In other words, multiple, independent, offline copies of this crucial data are distributed to hundreds (hopefully, in time, thousands; dare we hope millions?) of different people around the world, and that ensures that these books will live on, digitally, beyond all manner of censorship and disasters short of the literal end of the world. The added advantages for education and the preservation of the good aspects of culture are obvious.

We have also started EncycloSearch.org and EncycloReader.org, the first two nodes of a network of free archives of dozens of encyclopedias. This is important work, for which we received significant funding from FUTO.org.

But we at the Knowledge Standards Foundation want to do more.

And we as a society must do more.

We recited, above, some increasing restrictions on political speech inconceivable just ten years ago. But for me, the straw that really broke the camel’s back was the recent attacks on the Internet Archive. So we have come to a firm conclusion:

It is top priority that we make as many copies of the free data of civilization as possible, beginning with but not restricted to encyclopedias, and we must distribute these copies as widely as possible.

This has been the Knowledge Standards Foundation’s mission since 2019.

Freedom-loving geeks always believed that decentralized data is worthwhile. Back in the salad days of the open source software movement of the 1990s and early 2000s, before the rise of centralized Big Tech, we thought this was the natural way of things. We said that widely distributed data in multiple copies—like free software, Usenet, blog aggregators, BitTorrent, Freenet, and of course the very DNS system that runs the internet—was essential to the uncensorable nature of the internet. Now that so much data has moved to “the cloud,” we can no longer blithely assume that “information wants to be free.” My, how times have changed.

Putting the data “on chain” is the go-to of crypto entrepreneurs, but, I’m sorry to say, this solves nothing. Tokenization of content does not necessarily distribute it in many independent copies. And many copies can be distributed without tokenization. Most blockchains, it seems, exist in only one copy, and ironically, that copy is not infrequently found on centralized, corporate Establishment systems like AWS.

The Proposal in General: Download and Serve Up Knowledge

We have a proposal for potential major donors to the KSF: Together, we will build a prototype of an extensible system that would, in time, make all the media of civilization widely distributed.

We would kick-start the infrastructure that could, in time, create multiple independent backup copies of the Internet Archive. Depending on what donors decide to fund, we’ll design and build for you (and the world) the physical backup media, or servers, or both, that will help seed free, digitally-signed copies of encyclopedias, books, social media data, and other of the public data we decide to save.

We may break this ambitious project into a few steps and options. The donor could make these decisions if desired, or leave them up to us; the KSF is enthusiastic about everything described here.

Step one: Put a massive archive on storage media, and make this available for sale. Grab a bunch of files and standardize them as needed. Our initial goal would be to transfer any desired files to locally-hosted servers and to digitally sign copies of them, typically in the ZWI file format. Once the files were made and stored on a local server, we would transfer them to storage media (SSDs, HDDs, or flash drives) and make them available for purchase.
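To make the idea of verifiable copies concrete, here is a minimal sketch in Python. It is not the ZWI signing scheme, whose details are not described here; it only builds a SHA-256 checksum manifest for a directory of files and checks another copy against it. The function names (`build_manifest`, `verify_copy`) are hypothetical, chosen for illustration. A real digital signature would additionally sign the manifest with a private key, which this sketch leaves out.

```python
import hashlib
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so very large files never sit fully in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root: Path) -> dict[str, str]:
    """Map each file's path (relative to root) to its SHA-256 hex digest."""
    return {
        path.relative_to(root).as_posix(): sha256_of_file(path)
        for path in sorted(root.rglob("*"))
        if path.is_file()
    }


def verify_copy(root: Path, manifest: dict[str, str]) -> list[str]:
    """Return the relative paths that are missing or altered in this copy."""
    problems = []
    for relative_path, expected in manifest.items():
        candidate = root / relative_path
        if not candidate.is_file() or sha256_of_file(candidate) != expected:
            problems.append(relative_path)
    return problems
```

Under these assumptions, the manifest would be built once on the local server, and anyone holding a drive could run `verify_copy` against it to confirm that their copy is complete and unaltered.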

Decision: Archive only, or library as well? At this point, the donor would decide whether to focus primarily on library or archival functions. An archival system could be entirely offline, or online but in harder-to-access forms. It could be retrofitted later with a network/library. The ZWIBook project acts as a model of this type of system, although it has only 69,020 book files. We hope sometime soon to retrofit it with file seeding, so the files are more easily accessible to everyone. Like ZWIBook, such an archive would be faster and cheaper to build and certainly would help preserve the data, but it would not be the network that needs to exist. For that, we need a library system. We need to build the first node in what would become, we hope, a network of redundant servers in the hands of many different entities; the Encyclosphere server network acts as a fairly mature model, although it features only a few terabytes at present. As more and more of such servers came online, found in many jurisdictions (and supported by individual desktop software: see below), the world would be guaranteed access to a wide variety of data.

Decision: Focus on encyclopedias, books, or other media?

As to encyclopedias, the KSF’s primary expertise is in scraping and making signed digital files (ZWI files) of online encyclopedia articles from open content encyclopedias such as Wikipedia and Ballotpedia, and in collecting metadata for (and archival copies of) proprietary but free-to-read encyclopedias such as the Stanford Encyclopedia of Philosophy. We also have some recent experience converting PDFs of old public domain encyclopedias into the same ZWI format, although this granular (per-article, not per-book) approach is more difficult and still under development. (See our work on Oldpedia.org.) For a donor who wants to support the expansion of this work by building a massive Encyclosphere server they control, we can ramp up any or all aspects of this work and bring it into a higher state of completion. And the data could be prepared for use in training LLMs.

As to books, we have also learned a great deal about working with Project Gutenberg and have gained some of the expertise needed to create an online, distributed version of ZWIBook, which would serve as a high-speed and multiply redundant distribution network for Project Gutenberg. We have some—limited—experience with Internet Archive, but are eager to create mirrors of their public domain books and, perhaps, other media. One essential decision to make, should we go that route, is the format in which to store the books. The choices include (a) ZWI and EPUB, or (b) PDF:

(a) If ZWI and EPUB, then we would use OCR software to export the books to a format like that used for ZWIBook. A thorough and automatic conversion of PDFs to human-usable HTML is badly needed, but there are hard challenges.

The advantages include that the files would be much smaller than those using images of each page, so that it would require less storage space to include them. This means that many more people could hold copies of all those books, which is an advantage that must not be underestimated. Only relatively wealthy people and institutions could afford the servers (and their hosting and management) needed to access a PDF-only collection. Another advantage is that ZWIBook software could be used as a book reader. Moreover, it is fairly trivial to convert from ZWI to EPUB, meaning EPUB readers could also be used. Once such a network were in place, then there would be ultimately something like 1.6 million books with user notes, highlights, and bookcase data shareable across the same network. (We could simply standardize the ZWIBook practices.) If ZWIBook’s 128GB for 69K books gives us the GB-per-book conversion rate, then the space required for Internet Archive’s public domain book collection would be approximately 3TB—which is an amount many people can afford. One might sell a hard drive with all of those books for a few hundred dollars. Assuming that the systems shared book files and associated data, this would also have potentially significant social networking effects that would redound to the benefit of students and scholars alike.
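The storage estimate above can be checked with a few lines of arithmetic. The sketch below uses only figures from the text (a 128GB drive, 69,020 books, and roughly 1.6 million target books) and makes two simplifying assumptions: that the whole drive capacity is taken up by book files, and that 1 TB is 1,000 GB. The constant and function names are illustrative.

```python
# Figures taken from the text; names are illustrative.
DRIVE_CAPACITY_GB = 128       # capacity of a ZWIBook flash drive
BOOKS_ON_DRIVE = 69_020       # books on that drive
TARGET_BOOKS = 1_600_000      # rough size of the target collection


def estimate_collection_tb(drive_gb: float, books_on_drive: int, target_books: int) -> float:
    """Scale the per-book size of the existing drive up to the target collection.

    Assumes the drive is entirely filled by the books, so the result is an
    upper-end estimate. Uses 1 TB = 1000 GB.
    """
    gb_per_book = drive_gb / books_on_drive
    return gb_per_book * target_books / 1000


estimate_tb = estimate_collection_tb(DRIVE_CAPACITY_GB, BOOKS_ON_DRIVE, TARGET_BOOKS)
print(f"Estimated collection size: {estimate_tb:.2f} TB")
```

This works out to just under 2 MB per book and a little under 3 TB in total, consistent with the approximate figure given above.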

The disadvantages are, however, numerous; the project would be hard. For one thing, it would result in lossy files. High-quality PDFs have information that is simply lost in converting to Project Gutenberg-style HTML files. Moreover, the files would have some mistakes; software for catching and quickly addressing the mistakes is not mature. Still, OCR has remarkably improved in recent years and has become almost perfect, when the book scans are of adequate quality. However, not all of them are suitable for reliable OCR. For these, we would still need to include the PDFs. The biggest disadvantage of storing Internet Archive books in ZWI/EPUB format might be that converting the whole collection of public domain Internet Archive books would take a great deal of time and energy.

If we did go this route, we would still want to put all the PDFs on archive-quality tape. Copies of such tapes could be sold and distributed.

(b) If PDF, the project is simpler.

The advantages include that there would not need to be any time coding and executing a massive project to convert PDFs to ZWI/EPUB. Hence, the project would probably be quite a bit cheaper, on the whole, in terms of up-front costs. There would also be possibly significant advantages involved in retaining the same source format (PDF) of Internet Archive itself. This means that the network would simply expand on what the Internet Archive has already started.

The disadvantages are that, without high-quality HTML in EPUB and ZWI format, the books would remain just as hard-to-use as they are at present. With redundant copies seeded by a network of servers, the books might open and download faster than with Internet Archive, but from the end-user’s perspective, there would be no tangible advantage over the existing systems—apart from the presence of multiple copies. That suggests that the main advantage of a PDF-only project would be archival, rather than use. If the books are not available in a more machine-processable format, the social network effects of their easier availability are not experienced. But at least they would be saved, and hopefully, again, in multiple copies. The failure to obtain network effects is a different problem from securing the books against censorship or restriction, which is perhaps the higher priority.

Step two: Develop local and/or internet software. The project, whether archive-only or library as well, would require software to access the files, of course. So, what sort?

Generally speaking, archival projects (which would aim to fill up removable media and/or tape drives with terabytes of files) would have only local access, or very rudimentary remote access (such as command line or API). The PDFs themselves could be inexpensively saved to tape. Even so, we could aim in various ways to make the archive faster and more user-friendly than the Project Gutenberg search. A more Encyclosphere-oriented archival project makes little sense, since the format is human-usable and not just archival; still, the archive would certainly have a copy of the Encyclosphere files, and making a complete copy of Wikipedia, together with all its history, is a worthwhile project that is archival in nature.

If the donor prioritized public library functions over archiving functions, we would instead write server software that would make both search and reading features available online. This is finished for encyclopedia articles (see EncycloSearch, EncycloReader, and ZWINode and its demo), and ZWIBook can be rewritten as web server software.

If there were a focus on an encyclopedia library (the Encyclosphere), then another option is to install WordPress on the server, permitting users to use the KSF’s EncycloShare plugin. This allows users to push encyclopedia articles from blogs directly to EncycloSearch and EncycloReader, or to any ZWINode installation. ZWINode also permits direct upload of articles, but this is not as feature-rich as WordPress.

One top priority for any web-connected library would be to seed the files via (as desired) BitTorrent, WebTorrent, or IPFS. This is essential to the project if it has any sort of network component, although not if the file collections are deliverable only in the form of removable or archivable tape media (which is a very worthwhile project as well).

We would love to build a proper online library system, of the sort Internet Archive ought to (but does not) have. With uptake from multiple content hubs, such servers and seeded files would become the backbone of a new kind of distributed content system. This system would let humanity escape the walled gardens of Big Tech. With enough servers in place and enough copies of the files being seeded, developers everywhere could rely on the files being there, in arbitrarily many copies, supporting the development of all sorts of tools that would give ordinary people free access to the data. EncycloReader.org and EncycloSearch.org are good demos of what we mean. But there need to be similar aggregators for books, social media, videos, and other categories of content.

Deliverables

Our essential deliverables to the KSF donor(s) would be two items:

(1) Backup media and/or servers, with supporting software. With a more minimalistic archival-type system, we would deliver (a) many terabytes of high-capacity backup media (probably RAID arrays of high-quality HDDs, but could be loose hard drives, tape drives, or SSDs if desired), without advanced software. With a RAID array of HDDs or SSDs, at a minimum, there would be the ability to seed the files on BitTorrent and a search facility that makes use of the file metadata. With a more feature-rich library-type system, we would deliver (b) more advanced software, but (depending on funding) possibly fewer terabytes of data. The library type, (b), is a much bigger project than the archive type, (a).

In other words, we can focus on data collection or software. Doing both (a) and (b) to any great extent would take much longer and cost more, but is very much worth doing. Either type of project is a major undertaking, and executing the whole of my vision (see below) is ultimately a years-long project, which would need at least a few million dollars to complete. We can start small, however, and, as with ZWIBook, do good in increments.

(2) A production and sales system for the same, online and running. We will set up production and sales procedures allowing the public to purchase the backup media and/or servers. In other words, we do not want to build a one-off of the system. You would be supporting the KSF in the development of this system precisely in order to enable people to participate in the creation of a new, decentralized content network.

I realize that the above is underspecified. I have left it so in order that we can discuss and pin down the details. That being said, without knowing what, precisely, the proposal is or how much funding we will have to work with, we cannot specify a time frame for delivery. We will not take on this project until we have specified a reasonable (and tested) time estimate for the work. We will commit to providing the deliverables according to spec within a set amount of time. Without a major financial commitment, we do not want to launch an open-ended project. But we can agree to accept donations in tranches, dependent on our progress. We would also be quite willing to take on a relatively small project, or to take on parts of the overall project piecemeal, however you would like to structure your donation. We would be happy to deal with individuals or foundations. We will not make strings-attached arrangements with large corporations or governments. This project must be independent from the outset.

Personnel and Final Considerations

In addition to myself, I have two experienced senior developers, who have proven their ability with EncycloReader and EncycloSearch, as well as a senior network engineer as a board member who has agreed to help if needed; as an expert at networking, he can structure and build large server clusters, racks, hosting, and more. The two aforementioned developers also have experience with server purchasing and construction. I can help design and write bug-free and open source front end software, and of course I would lead and help promote the project.

I have left decisions open, because I sense that more funding might be available if we adopt one focus or another according to the preferences of donors. Everything I have described on this page badly needs doing, so I am very comfortable leaving that choosing up to donors. That said, if you were to say that, for example, you would like to donate $100,000 for whatever we think can be built with that amount, leaving it up to us, then we would write up a specific plan and work to deliver a certain product in a given time frame.

Philanthropists, this is work that needs to be done; but we simply cannot afford to take it on without your financial help.

This is an opportunity to change the world and build the freedom technology that the world greatly needs. Please get in touch with me at [email protected] and let’s work out the details.
