Category: Theory

-

Why I Have Not Been a Christian, and Why That Might Change

A Personal History of My Nonbelief: I think I lost the faith I was raised in (Lutheran) when I was 16, a few years after the family stopped going regularly to church. That […]

-

How I’m Reading the Bible in 90 Days

The Bible is easily the most influential book of Western literature. If you haven’t read any part of it at all, you aren’t educated, period. But, for that matter, if you haven’t read […]

-

A Theory of Evil

First posted Aug. 16, 2019. Revised and reposted Nov. 4. Good to read alongside “Why Be Moral.” For a long time, the nature of evil eluded me. But dark contemplation of the Jeffrey […]

-

Big Tech In Decline?

Massive Shakeup of Major Players Under Way, Especially in Social Media: LarrySanger.org does not usually break news. But since this is such a huge story and no other outlets seem to be covering […]

-

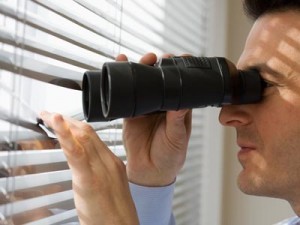

Constantly monitor those in power

“Quis custodiet ipsos custodes?” That’s a question we should be asking more in this day and age of constant surveillance. I’m toying with a proposal: Anyone who goes into public office should have […]

-

On the misbegotten phrase “surveillance capitalism”

The loaded phrase surveillance capitalism has been in circulation since at least 2014, but it came into much wider use this year with Shoshana Zuboff’s book The Age of Surveillance Capitalism. The phrase […]

-

The challenges of locking down my cyber-life

In January 2019, I wrote a post (which see for further links), one I have shared often since, about how I intended to “lock down my cyber-life.” That was six months ago. I made […]

-

On the clash of civilizations

There is a global conflict underway. A good way to understand it is by looking at the different interests that are coming into conflict. And a good place to begin is, of course, […]